Session 2 of the User Engagement strand looked at what makes a well-used resource, and how to measure the impact a resource is having on its users using qualitative and quantitative metrics. Claire Davies from Curtis and Cartwright focused on the benefits of audience research, and Eric Meyer from the Oxford Internet Institute talked about the Toolkit for the Impact of Digitised Scholarly Resources (TIDSR) and different methods of measuring impact. Finally, Susan Whitfield from the British Library gave an overview of the International Dunhuang Project, which aims to put the Silk Road online.

The session was chaired by Paola Marchionni, JISC Digitisation Programme Manager. She began by saying that how users engage with resources is very important, particularly if content is consolidated (how do you cater for a plurality of users?).

Claire Davies from Curtis and Cartwright kicked off by asking: Who are your audiences? She focused on the benefits of audience research, and gave an interesting case study of where audience research had been used.

Why research your audiences – what are the benefits?

- It gives you an evidence base to help you make better decisions, and is useful for bids for funding.

- It allows you to find out where there's a market. What do your audiences want?

- It can help you decide whether you've got a pilot right: is it usable? Does the search engine work? Is it accessible?

- Project/service transition: it's especially useful for securing future funding. Have you reached your audiences? Are they satisfied?

- It can help with ongoing improvements: what could make your service better? It can also help you set priorities if you've got a limited budget, and target appropriate groups for marketing.

What is audience research?

Claire explained that audience research was a process of planning and conducting a programme of work to better understand audiences and audience behaviour. It’s more than just demonstrating a certain number of visitors or website hits – it can give you a rich picture of information (on customer satisfaction, for example).

She took as a case study the British Library Archival Sound Recordings, a JISC-funded digitisation project.

They used audience research to:

- develop and test the website

- promote the service

- monitor use of the website

- ensure the service is fulfilling its role

- gather and test ideas for the future (which collections should be digitised next?)

Audience Research Lifecycle – some good practice guidance

- Define the target audience (who is it for? You may have groups with different needs)

- Plan the research (set clear objectives: what questions do you need to answer? Are you researching funding models? Who doesn't use your service?)

- Collect the research data (DIY or buy? Which research methods: survey, focus groups, ethnography? The methods need to complement each other.)

- Analyse the data to model your audience, ie build model personas of users

- Apply and make use of the research

Claire also made the point that, whether digital or non-digital, the principles of audience research are the same. There are obviously additional research techniques available digitally (eg web statistics), and the audience is remote.

Co-existence is an interesting consideration when looking at digital and non-digital resources, since some institutions have both. The London Museums Hub looked at the primary use of its websites, and discovered that most people were visiting them to find out when museums were open, how to get there etc, so there was no point developing online collections.

Claire also warned against taking up new technical possibilities (eg podcasts) if they weren't something the audience was interested in; an enhanced search engine might be more useful. Developments need to be user-led, not technology-led.

Key points

To sum up, her key points included:

- know your audience!!

- audience research doesn't need to be perfect to be useful.

- doesn't have to be a massive project – even a small audience research project can have a big impact

- many techniques can be implemented cheaply (piggyback on what you're already doing – workshops, feedback from helpdesks etc)

- audience research should be an ongoing process – it's much more effective when it's built into the project lifecycle

- can help you prove value, and is useful for programme management

More information and guidance is available at https://sca.jiscinvolve.org/audience-publications/

Toolkit for the Impact of Digitised Scholarly Resources (TIDSR)

Eric Meyer from the Oxford Internet Institute then talked about the Toolkit for the Impact of Digitised Scholarly Resources, which focused on the usage and impact of a selection of JISC Phase 1 Digitisation Projects. The key recommendations to JISC and the presentation slides are available on the TIDSR website, http://microsites.oii.ox.ac.uk/tidsr

The Phase 1 projects studied were:

- Online Historical Population Reports

- British Library 19th century newspaper project

- British Library archival sound recordings (phase 1)

- BOPCRIS (British Official Publications Collaborative Reader Information Service)

- Wellcome Medical Journals: Backfiles Project

The goal is for the TIDSR toolkit to grow over time and become a knowledge base. It provides a series of articles, sources of data, etc about different methods of measuring impact. Eric made the point that combining a number of methods gives you a more nuanced picture of impact.

Quantitative methods

- analytics (eg Google Analytics – really useful if you have your own website). But don't get too bogged down in the numbers; look at them over time, say over three or six months.

- bibliometrics/science metrics (ie citations). This can be hard because in some fields there's a bias against citing electronic sources (for example, you might cite a physical newspaper rather than its digitised version). Including a recommended citation URL on your site helps.

- log file analysis – can help you understand how people move through a website, how long they spend on pages etc; it can be combined with focus groups and questionnaires/surveys.

- webometrics – like bibliometrics, but for links (inward and outward) to and from sites. These can help if you're trying to understand the relative importance/value of sites.
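
To make the log file analysis point a little more concrete, here is a minimal Python sketch (not part of the TIDSR toolkit) of the kind of thing it involves. It assumes your web server writes access logs in the common Apache/NGINX "combined" format; your server may log differently. It counts page views, tallies external referring sites (which also covers the referrer analysis mentioned below), and estimates time on page from the gap between one visitor's consecutive requests. The visitor key (IP address plus user agent), the 30-minute session cut-off, the host name www.example.org and the sample log lines are all hypothetical.

```python
import re
from collections import Counter, defaultdict
from datetime import datetime, timedelta
from urllib.parse import urlparse

# Combined Log Format: host ident user [time] "request" status size "referer" "agent"
LOG_PATTERN = re.compile(
    r'(?P<host>\S+) \S+ \S+ \[(?P<time>[^\]]+)\] '
    r'"(?P<method>\S+) (?P<path>\S+) [^"]*" (?P<status>\d{3}) \S+ '
    r'"(?P<referer>[^"]*)" "(?P<agent>[^"]*)"'
)
SESSION_TIMEOUT = timedelta(minutes=30)  # hypothetical cut-off between visits

# Hypothetical sample log lines, for illustration only.
SAMPLE_LOG = [
    '192.0.2.1 - - [10/Mar/2009:10:00:00 +0000] "GET /collections/newspapers HTTP/1.1" 200 5120 '
    '"https://www.google.com/search?q=newspapers" "Mozilla/5.0"',
    '192.0.2.1 - - [10/Mar/2009:10:02:30 +0000] "GET /collections/newspapers/1850 HTTP/1.1" 200 8000 '
    '"https://www.example.org/collections/newspapers" "Mozilla/5.0"',
    '198.51.100.7 - - [10/Mar/2009:11:00:00 +0000] "GET /collections/newspapers HTTP/1.1" 200 5120 '
    '"https://library.example.ac.uk/reading-list" "Mozilla/5.0"',
]


def parse(lines):
    """Yield one dict per successfully served (HTTP 200) request; skip anything else."""
    for line in lines:
        m = LOG_PATTERN.match(line)
        if not m or m["status"] != "200":
            continue
        entry = m.groupdict()
        entry["time"] = datetime.strptime(entry["time"], "%d/%b/%Y:%H:%M:%S %z")
        yield entry


def summarise(lines, own_host="www.example.org"):
    """Return (page views, external referrer counts, average seconds on page)."""
    entries = sorted(parse(lines), key=lambda e: e["time"])
    page_views = Counter(e["path"] for e in entries)

    # Referrer analysis: which external sites send people to us?
    referrers = Counter()
    for e in entries:
        ref_host = urlparse(e["referer"]).netloc  # "" for a "-" (no referrer)
        if ref_host and ref_host != own_host:
            referrers[ref_host] += 1

    # Time on page: gap until the same visitor's next request, if within one session.
    by_visitor = defaultdict(list)
    for e in entries:
        by_visitor[(e["host"], e["agent"])].append(e)
    dwell = defaultdict(list)
    for visits in by_visitor.values():
        for current, nxt in zip(visits, visits[1:]):
            gap = nxt["time"] - current["time"]
            if gap <= SESSION_TIMEOUT:
                dwell[current["path"]].append(gap.total_seconds())
    avg_dwell = {path: sum(s) / len(s) for path, s in dwell.items()}
    return page_views, referrers, avg_dwell


if __name__ == "__main__":
    views, refs, dwell = summarise(SAMPLE_LOG)
    print(views.most_common())  # [('/collections/newspapers', 2), ('/collections/newspapers/1850', 1)]
    print(refs.most_common())   # [('www.google.com', 1), ('library.example.ac.uk', 1)]
    print(dwell)                # {'/collections/newspapers': 150.0}
```

Treating the gap to a visitor's next request as time on page means the last page of each visit gets no estimate, and IP address plus user agent is only a rough stand-in for a person. Figures like these are best read as trends over months and combined with focus groups and surveys, as Eric suggested.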

Qualitative methods

- content analysis of news reports (resources with unique names are easier to track!)

- focus groups

- interviews with project personnel, users and non-users

- referrer analysis (for example from reading lists and libraries)

- user feedback (need to track patterns)

- audience analysis

Eric also pointed to some small, unexpected impacts that had come out of the interviews that they’d carried out:

1. The quality of undergraduate dissertation work was improved by early contact with digitised primary sources (undergraduates can now use, virtually, materials they would otherwise not have been able to access until much later, as postgraduates).

2. The types of research projects being presented at conferences were increasingly quantitative (eg text analysis on large collections).

3. There were new possibilities for serendipitous research – you can see what else is in a collection quite easily.

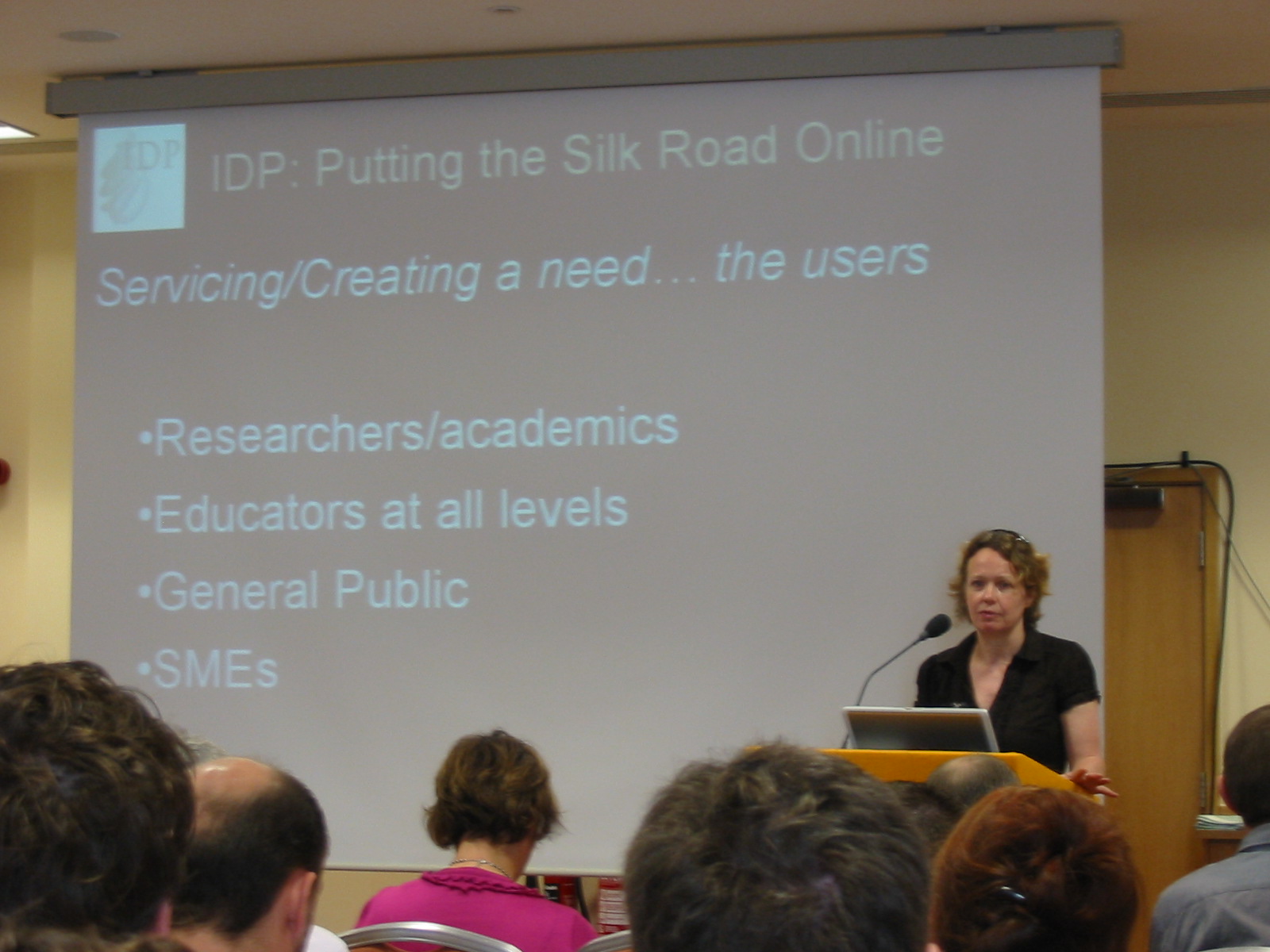

International Dunhuang Project

Finally, Susan Whitfield from the British Library spoke about the International Dunhuang Project to put the Silk Road online.

In the early 20th century, the Silk Road saw a period of imperial archaeological expeditions and exploration (especially in the eastern Gobi area, where desert conditions had preserved materials). Finds were packed up and sent by camel and ship to the explorers' home countries, and most ended up in public institutions.

One particular site, Dunhuang, contained a hidden, sealed cave (around 900 years old) holding 40,000 manuscripts and paintings. Many of these are now in the Bibliothèque Nationale in Paris. The finds are a very rich, very fragile resource dispersed worldwide, which makes them an obvious candidate for digitisation so that they can be viewed as a single entity.

The IDP was formed in 1994 to collaborate on conservation, cataloguing, digitisation, research and education. There are around 10 holding institutions worldwide with major collections and 30 smaller ones, covering over 200 archaeological sites and 300,000 (possibly 500,000) manuscripts, paintings and artefacts, of which around 100,000 are online.

The first online resource went live in 1998. The website is available in the languages of all its users (Chinese, Russian, French, English etc), with others coming online soon. There isn't a single definitive catalogue online: all the historical catalogues (published and unpublished) are used, and users are encouraged to submit their own work too.

What makes a well-used resource?

IDP staff are themselves primary users of the site, and are very close to their users: they spend a lot of time with them at conferences etc. Susan said a well-used resource was one that was:

- visible – people have to know about it

- high quality – in design and content

- usable – including issues of access

- reliable – the site must stay up

- focused – with a clear set of users and a clear reason for digitisation

She also made the point that while the IDP's core users were researchers/academics and educators at all levels, the general public and SMEs were also targets (particularly when chasing European funding).

But there are some problems:

- you can’t try to please everyone

- some projects may not want ever-increasing numbers of users (network capacity and servers are a problem)

- predicting the future – it’s impossible!

Questions

A lively question and answer session followed, which probed the issues a little deeper.

Q: To the IDP project: have you considered linking to research output related to the subject? Or collaborating on projects like the one funded by three UK universities to create a Wikipedia-style resource on the Silk Road?

Susan: Yes, we do link to research output, and we invite researchers to send us their research projects, which we put online, whether those projects were done by ourselves, in collaboration, or by people using our material. We're also a partner with Golden Web.

Q: There's a tension/paradox here: the more specific your collection is, and the more specific information you have about your audience that you can use to justify funding etc, the better. But what do you do about economies of scale/collaboration?

Eric: It can be hard once things are federated – are you putting them in the right places where the people you want to attract are going? Are the materials well linked to? It can be hard to get people to do things in a different way.

Q: Leeds University Library was surprised to find some of its online material on leather bindings very popular, which raises the question of whether some of the metrics can be skewed.

Eric: This can be shown up in the qualitative data. You can then put something on your site to say – we’re really not the right place for what you’re looking for! (HistPop did this for genealogy searchers).

Claire: Don’t rely on web statistics! Combining all the data is very important, and can help with this.

Q: Can you talk about societal impact (important for ESRC-funded services, for example)?

Susan: In terms of public use of the resource, we haven't done this formally. We've done it informally, via mailings and talks/lectures in the various geographical areas. Most of our sense of public use comes from logs and feedback (80% of feedback on the site is from the general public).

Claire: Non-usage and unintentional usage are the hardest to get a handle on. You have to be quite innovative (and where do you stop?). Rather than attempting the impossible, you could piggyback a single question on broader surveys.

Susan: It concerns us for EU funding bids – especially as they are focusing on increasing knowledge of this with the general public in Europe.

Q: For Claire: you use the term audience research. Is this different to market research (which can have slightly negative connotations!)? Do we have to be careful about approaching market research firms, and are there any words of caution?

Claire: In terms of what it's called, "market research" seems to be a private-sector term; there's not a huge amount of difference. If you do hire people in, you need a good knowledge of what you want out of it. The onus is on you to work out what you want, and make sure people deliver.

Q: Looking at this research and evaluation, we’ve not talked about whether there’s a policy on open access to raw/digested data?

Eric: We’re all for openness: our data/results are there for all to use. There is a general trend towards more openness.

Summary: some top tips from the panel!

Eric: Have people involved who are users/creators of the resource, and experts in the content.

Claire: Don’t underestimate the challenge of developing digital services – it’s every bit as hard as the physical world. But get stuck in, and do stuff within your limitations.

Susan: Listen to your users but don’t be bullied by them (it’s like letters to newspapers – they’re not a representative sample!).